An Introduction to Anthropic’s Model Context Protocol (MCP) with Python

Are you looking for a standardized way to connect your AI applications to external tools? Then, the Model Context Protocol (MCP) might be exactly what you’re looking for. It’s like a USB-C port for AI systems.

MCP has been adopted quickly in the AI community, and for good reason. Large companies such as Block and Microsoft already use it: Block has developed an MCP-based AI agent named Goose to automate software engineering tasks, and Microsoft announced native MCP support in Windows in May 2025.

In our opinion, every data scientist and software engineer should understand how MCP works because it will be an integral part of nearly every software product. It is a universal and model-independent interface in the era of AI.

In this article, we explain what MCP is and why it is more than just hype. In addition, we will implement a simple MCP client and server in Python.

Let’s take a closer look at MCP.

What is the Model Context Protocol?

MCP is an open protocol introduced by Anthropic in late 2024 with the goal of standardizing how AI applications provide context to large language models (LLMs).

Similar to a USB-C port, MCP provides a standardized way to connect AI applications (chatbots, AI assistants, IDEs, …) to external data sources, tools, and systems. With MCP you can make your AI apps smarter and more functional.

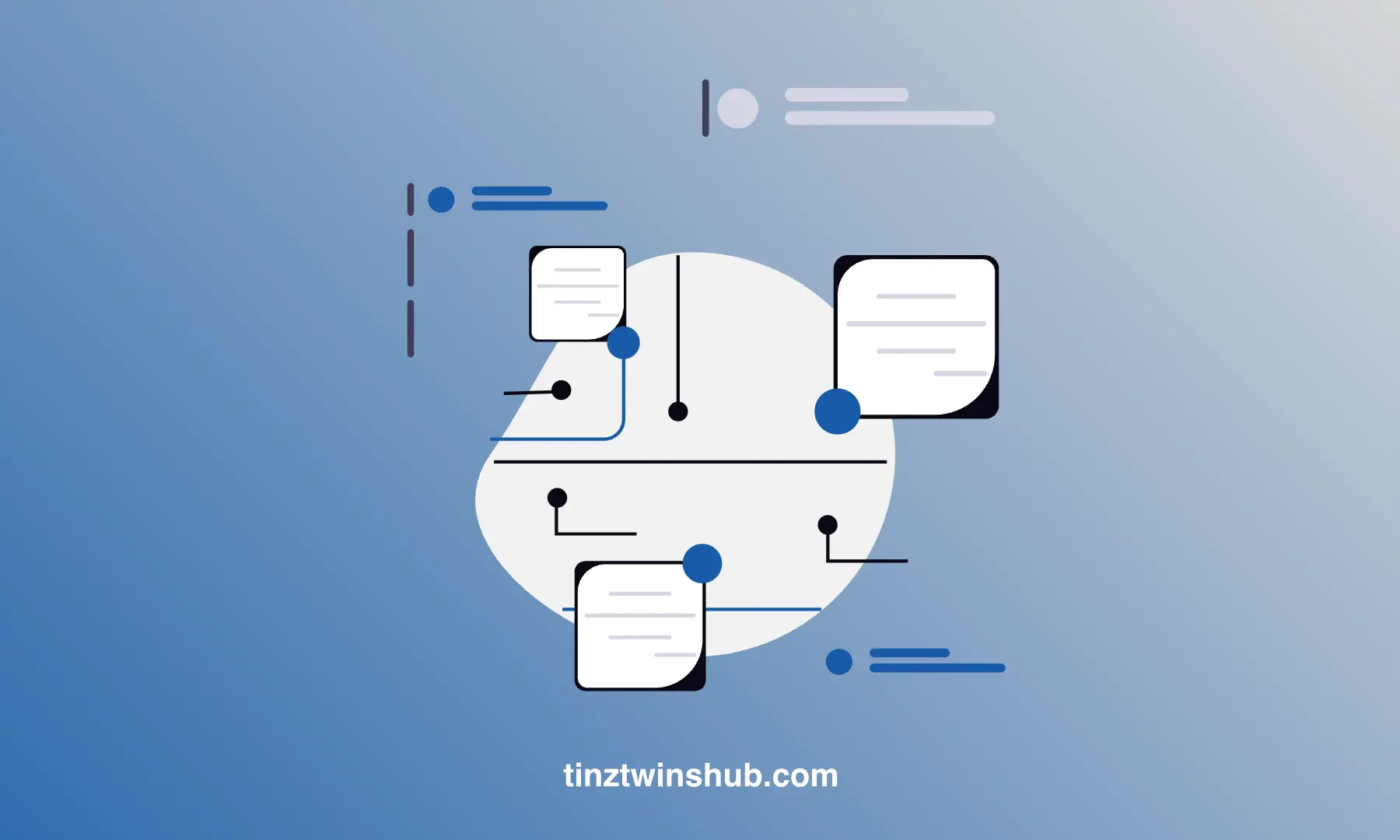

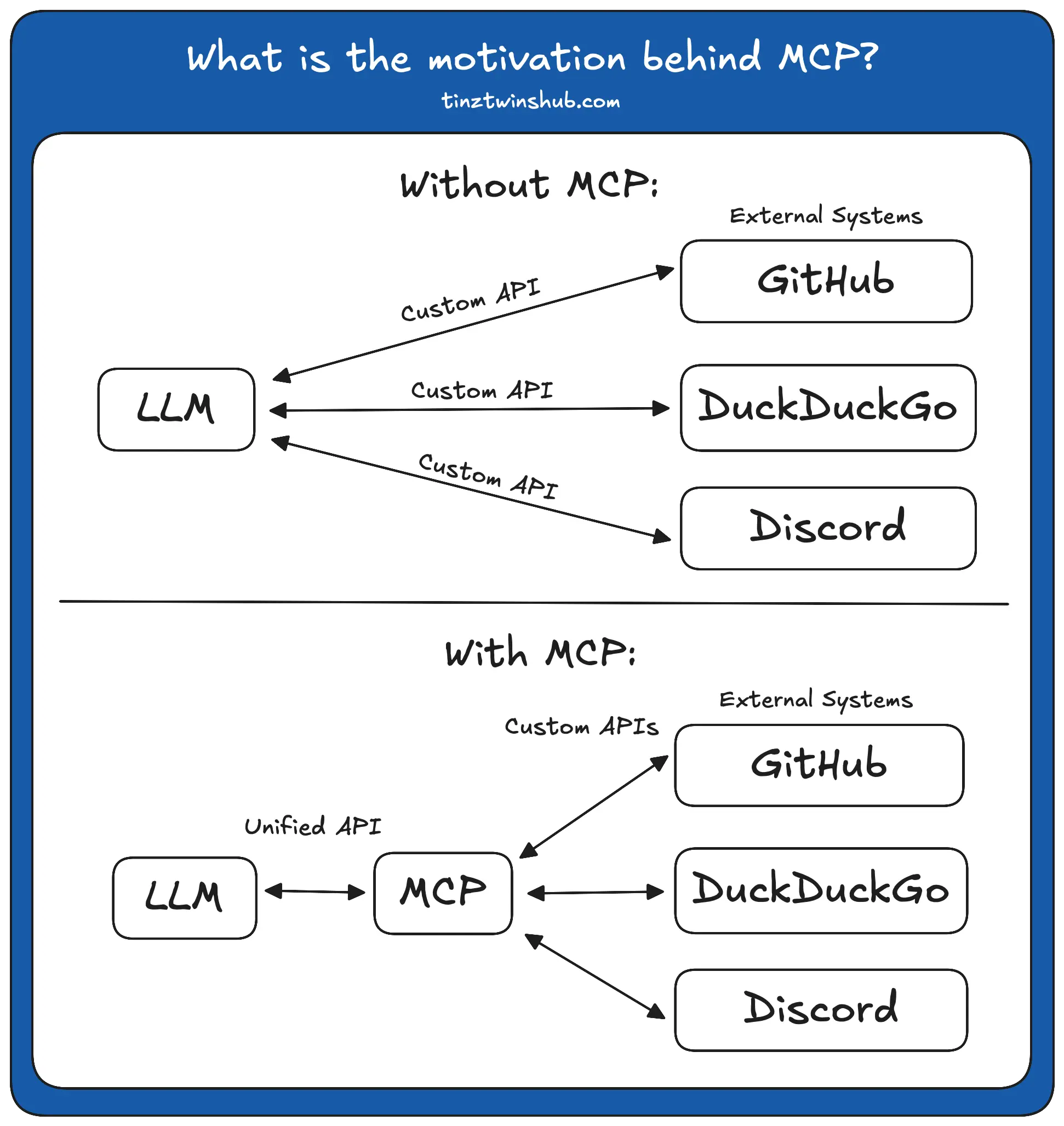

In AI applications, LLMs often need to interact with external data and tools. Without MCP, this is an “m×n” problem: you have m different AI applications and n different external systems (GitHub, DuckDuckGo, …), so you need to build m×n different integrations.

This complexity leads to inconsistent implementations and a lot of duplicated work. In simple terms, “bad software”.

MCP addresses this problem and turns it into an “m+n” problem. Developers build m MCP clients for their AI applications, and tool creators build n MCP servers. Once an MCP server exists, any MCP client can reuse it.
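To make the arithmetic concrete, here is a minimal sketch. The counts (4 applications, 6 external systems) are made up purely for illustration.

```python
def integrations_without_mcp(m_apps: int, n_systems: int) -> int:
    """Every application needs its own connector for every external system."""
    return m_apps * n_systems


def integrations_with_mcp(m_apps: int, n_systems: int) -> int:
    """Each application gets one MCP client, each system one MCP server."""
    return m_apps + n_systems


# Hypothetical numbers, chosen only for illustration
m_apps, n_systems = 4, 6
print(integrations_without_mcp(m_apps, n_systems))  # 24
print(integrations_with_mcp(m_apps, n_systems))  # 10
```

The gap widens quickly: every additional application costs n new integrations without MCP, but only one new client with it.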

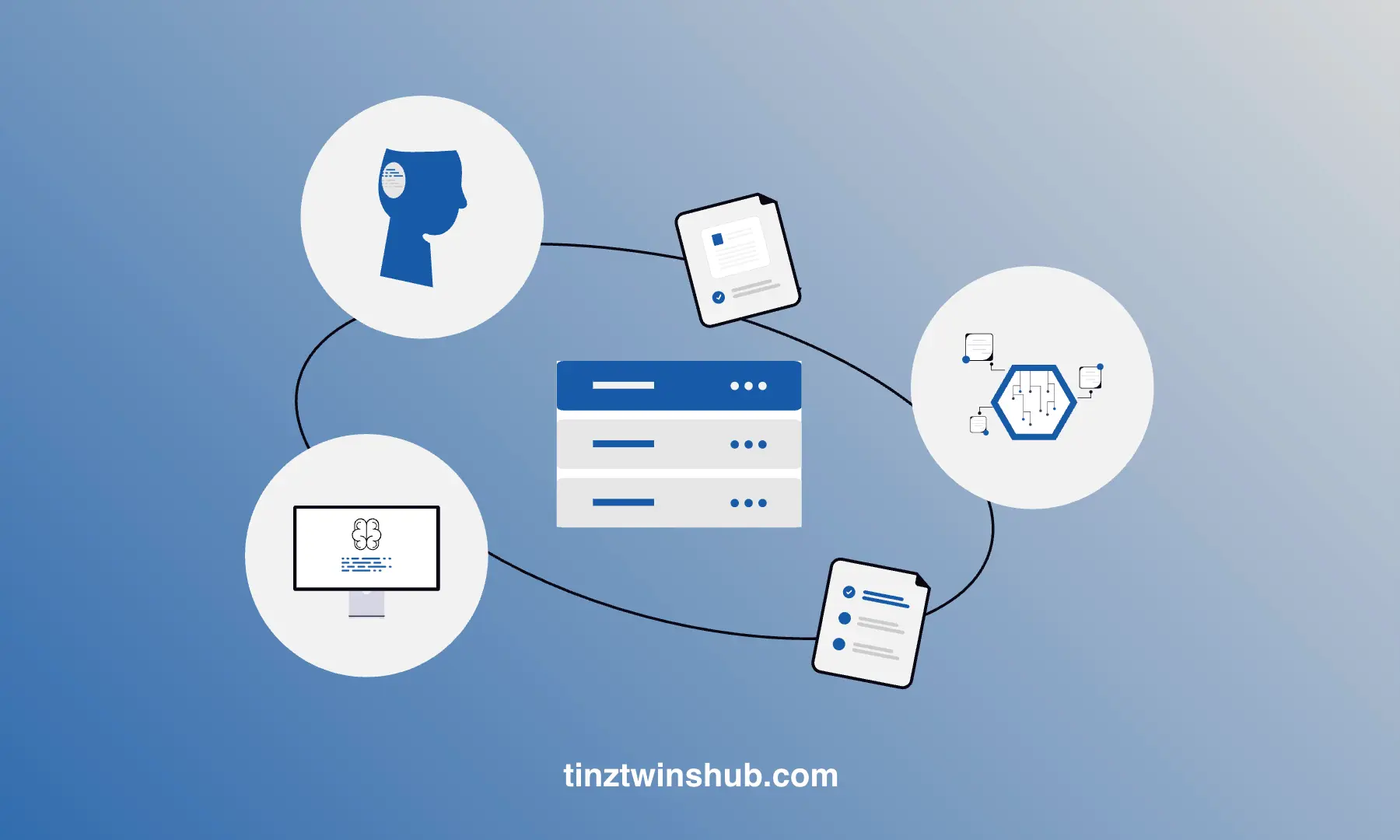

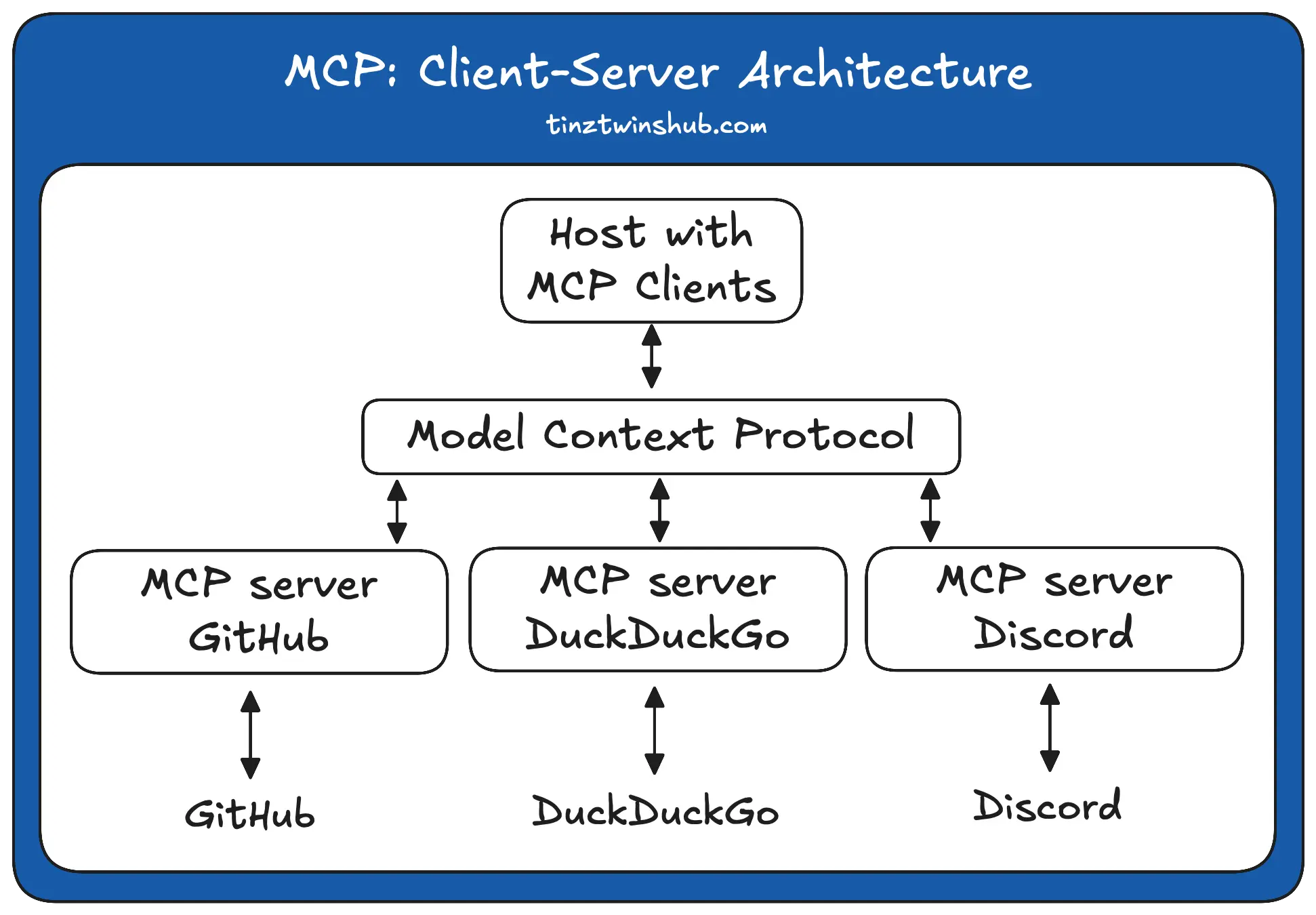

MCP uses a client-server architecture where a host application can connect to multiple MCP servers.

- MCP Hosts: AI applications like Claude, Goose, IDEs, or custom AI apps that want to access external systems

- MCP Clients: Live inside the hosts, maintain 1:1 connections with servers

- MCP Servers: External programs that provide tools, resources, and prompts to clients
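The relationship between these three roles can be modeled in a few lines. This is only a toy sketch: `Host`, `Client`, and `Server` are hypothetical stand-in classes, not types from the MCP SDK, and the server names are invented.

```python
from dataclasses import dataclass, field


@dataclass
class Server:
    """Stand-in for an MCP server (hypothetical, not an SDK class)."""
    name: str


@dataclass
class Client:
    """Lives inside the host and talks to exactly one server."""
    server: Server


@dataclass
class Host:
    """An AI application that owns one client per connected server."""
    name: str
    clients: list[Client] = field(default_factory=list)

    def connect(self, server: Server) -> Client:
        # A new client is created for every server: the 1:1 relationship
        client = Client(server=server)
        self.clients.append(client)
        return client


host = Host(name="my-ai-app")
for server_name in ("github", "postgres"):
    host.connect(Server(name=server_name))

print([client.server.name for client in host.clients])  # ['github', 'postgres']
```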

MCP clients invoke tools, query for resources, and interpolate prompts. MCP servers expose tools, resources, and prompts.

🤔 But what are tools, resources, and prompts, and who controls them?

- Tools (Model-controlled): Functions that the AI model decides when to invoke. This is known as function calling or tool calling. Think of them as POST endpoints.

- Resources (Application-controlled): Data sources exposed to the application, like files and database records. Unlike tools, resources don’t perform significant computation or have side effects. Think of them as GET endpoints.

- Prompts (User-controlled): Pre-defined templates for interacting with LLMs.
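On the wire, MCP messages are JSON-RPC 2.0 requests and responses. The following sketch lists the request methods that belong to each primitive and shows what a tool call might look like. The method names follow the MCP specification; the `build_request` helper is hypothetical, and the tool name matches the example server built later in this article.

```python
import json

# Who controls each primitive, and the request methods used to discover and use it
PRIMITIVES = {
    "tools": {"controlled_by": "model", "methods": ["tools/list", "tools/call"]},
    "resources": {"controlled_by": "application", "methods": ["resources/list", "resources/read"]},
    "prompts": {"controlled_by": "user", "methods": ["prompts/list", "prompts/get"]},
}


def build_request(request_id: int, method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request (hypothetical helper, not part of any SDK)."""
    return {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}


request = build_request(
    1, "tools/call", {"name": "get_stock_price", "arguments": {"ticker": "TSLA"}}
)
print(json.dumps(request, indent=2))
```

In practice you rarely build these messages by hand, because the SDKs do it for you.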

You can build MCP clients and servers in various languages (Python, TypeScript, Java, Kotlin, C#, and Swift). In this article, we create a simple server and client in Python.

MCP Server

An MCP server is the bridge between the MCP world and external systems. In this context, servers expose external capabilities according to the MCP specification.

MCP supports two transport mechanisms to handle the communication between clients and servers:

- Stdio transport (standard input/output): You use it when the client and server run on the same host. It’s ideal for local processes.

- HTTP with SSE transport (server-sent events): You use it when the client connects to an external server via HTTP. It uses HTTP POST for client-to-server messages and server-sent events for server-to-client messages. Newer revisions of the MCP specification replace this transport with Streamable HTTP.
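With the stdio transport, the client launches the server as a subprocess, and the two exchange messages as newline-delimited JSON over stdin and stdout. A rough sketch of that framing is shown here; `encode_message` and `decode_message` are hypothetical helpers, not SDK functions.

```python
import json


def encode_message(message: dict) -> bytes:
    """Serialize one message as a single line of JSON terminated by a newline."""
    # json.dumps escapes newlines inside strings, so the output stays on one line
    return (json.dumps(message) + "\n").encode("utf-8")


def decode_message(line: bytes) -> dict:
    """Parse one newline-terminated line back into a message."""
    return json.loads(line.decode("utf-8"))


ping = {"jsonrpc": "2.0", "id": 1, "method": "ping"}
wire = encode_message(ping)
assert decode_message(wire) == ping
```

Because stdout carries protocol messages, a stdio server should write its own logs to stderr rather than stdout.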

In the following, you can see an example of an MCP server (stdio transport) using the MCP Python SDK:

```python
from mcp.server.fastmcp import FastMCP
from openbb import obb

mcp = FastMCP("MCP Server Example")


# Add a resource, e.g. a greeting of a user
@mcp.resource("greeting://{name}")
def get_greeting(name: str) -> str:
    """Get a personalized greeting"""
    return f"Hello, {name}!"


# Add a tool that looks up the latest price for a ticker symbol
@mcp.tool()
def get_stock_price(ticker: str) -> str:
    """Return the stock price of a specific ticker"""
    quote_data = obb.equity.price.quote(symbol=ticker, provider="yfinance")
    return f"Stock price {ticker}: {quote_data.results[0].last_price}"


# Add a prompt template that wraps the user's message
@mcp.prompt()
def echo_prompt(message: str) -> str:
    """Answer a question as a stock market analyst"""
    return (
        "You are a helpful AI assistant. "
        "You can help with general queries "
        "and questions about the stock market. "
        f"Answer the following question: {message}"
    )


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

💡 You need to install the Python packages openbb and mcp first, for example with pip install openbb "mcp[cli]". The cli extra provides the mcp command used below.

Our server offers a resource that provides a personalized greeting, a tool to get the stock price for a specific ticker, and an echo prompt. In addition, the server uses standard input/output (stdio) as its transport mechanism.
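The resource URI `greeting://{name}` is a template: the part in braces is filled in by the client and passed to the function as the `name` argument. The SDK handles this matching for you. The following is only a simplified sketch of the idea, using a hypothetical `match_uri_template` helper, and it is not how the SDK implements it.

```python
import re
from typing import Optional


def match_uri_template(template: str, uri: str) -> Optional[dict]:
    """Return the template parameters extracted from uri, or None if it does not match."""
    # Split on {param} placeholders; odd-indexed parts are parameter names
    parts = re.split(r"\{(\w+)\}", template)
    pattern = "".join(
        f"(?P<{part}>[^/]+)" if index % 2 else re.escape(part)
        for index, part in enumerate(parts)
    )
    match = re.fullmatch(pattern, uri)
    return match.groupdict() if match else None


def get_greeting(name: str) -> str:
    return f"Hello, {name}!"


params = match_uri_template("greeting://{name}", "greeting://tinztwins")
print(get_greeting(**params))  # Hello, tinztwins!
```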

Run the MCP server: mcp run mcp_server.py

Examples of MCP servers:

- PostgreSQL, GitHub, Discord, and more.

MCP Client

An MCP client is part of a host application and communicates with a specific MCP server. Clients are responsible for managing connections, discovering capabilities, forwarding requests, and handling responses.

In the following, you can see an example of an MCP client using the MCP Python SDK:

```python
import asyncio

from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client


async def run():
    # Parameters for launching the server as a subprocess over stdio
    server_params = StdioServerParameters(
        command="python",
        args=["mcp_server.py"],  # path to the MCP server file
        env=None,  # optional environment variables
    )

    async with stdio_client(server_params) as (read, write):
        async with ClientSession(read, write) as session:
            # Initialize the connection
            await session.initialize()

            # List available tools
            tools = await session.list_tools()
            print(tools)

            # List available resources
            resources = await session.list_resources()
            print(resources)

            # List available prompts
            prompts = await session.list_prompts()
            print(prompts)

            # Call a tool
            result = await session.call_tool("get_stock_price", arguments={"ticker": "TSLA"})
            print(result)

            # Read a resource; the result holds a list of content items
            # (attribute names as in current versions of the MCP Python SDK)
            resource = await session.read_resource("greeting://tinztwins")
            content = resource.contents[0]
            print(content.mimeType)
            print(content.text)

            # Get a prompt
            prompt = await session.get_prompt(
                "echo_prompt", arguments={"message": "What are bollinger bands?"}
            )
            print(prompt)


if __name__ == "__main__":
    asyncio.run(run())
```

First, the client script outputs the available tools, resources, and prompts. Then, the tool returns the current stock price of Tesla. The resource request outputs “Hello, tinztwins!”. Finally, the client script prints the pre-defined prompt with the message.
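Printing the raw result objects is fine for a first test, but an application usually wants only the text. The sketch below assumes that a tool result has a `content` list whose text blocks carry `type == "text"` and a `text` attribute, plus an `isError` flag, as in current versions of the MCP Python SDK. To keep the snippet runnable without a server, it uses `SimpleNamespace` stand-ins instead of real SDK objects. `extract_text` is a hypothetical helper, and the price is invented.

```python
from types import SimpleNamespace


def extract_text(result) -> str:
    """Join the text blocks of a tool result; raise if the tool reported an error."""
    text = "\n".join(
        block.text for block in result.content if getattr(block, "type", None) == "text"
    )
    if getattr(result, "isError", False):
        raise RuntimeError(f"Tool call failed: {text}")
    return text


# Stand-in for the object returned by session.call_tool(...); the price is made up
fake_result = SimpleNamespace(
    content=[SimpleNamespace(type="text", text="Stock price TSLA: 123.45")],
    isError=False,
)
print(extract_text(fake_result))  # Stock price TSLA: 123.45
```

In the client above, you could call `extract_text(result)` right after `session.call_tool(...)` instead of printing the whole object.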

Run the MCP client: python mcp_client.py

No errors? Yeah, everything is working correctly! 🥳

Examples of MCP clients:

- Claude Desktop, Goose, VS Code, Cursor, Continue, and more.

Conclusion

MCP is the REST API for LLMs. It will simplify the development of context-aware AI apps and make them more maintainable. In addition, AI application developers can connect their apps to any MCP server with minimal work. Tool and API developers can build an MCP server once, and everyone can use it.

With MCP, AI product development teams have a clear separation between the different parts of software. As a result, MCP will lead to more powerful AI apps. We are excited to see how the development continues.

Now that you have learned the basics of MCP, start building your own AI apps. Share your project on social media and tag us so we can see what you’re working on.

Resources

- Model Context Protocol Documentation

- Model Context Protocol GitHub

- Building Agents with Model Context Protocol
