Run GenAI Models locally with Docker Model Runner

Are you interested in running large language models (LLMs) locally on your computer? If so, Docker Model Runner might be interesting for you!

GenAI is becoming more and more important in many software development projects. For this reason, it’s essential to know how to build and run LLMs locally. There are already tools like Ollama that make this possible. A few days ago, Docker also introduced a way to run LLMs locally called Docker Model Runner.

First, a short recap: what is Docker? Docker makes it easy to package, distribute, and run applications. Many software engineers use Docker to package software applications into containers to run applications consistently across different environments. This has simplified application development and deployment for years.

We have tried Docker Model Runner. In this article, we’ll show you how to use it in your existing Docker workflow.

What is Docker Model Runner?

Docker Model Runner is a new feature for deploying and running AI models. It makes AI model execution as simple as running a container. With Docker Model Runner, developers have a fast, low-friction way to run LLMs locally, making it easier to use AI models in their existing development workflow.

In addition, Docker Model Runner is a part of Docker Desktop and includes an inference engine. This inference engine is built on top of llama.cpp and is accessible through the familiar OpenAI API. No extra tools, no additional setup, and no separate workflows. All in one place, in Docker Desktop.

Docker Model Runner is a good choice for developers who don’t want to use cloud-hosted LLMs due to privacy concerns. Another reason to use local LLMs is to speed up your development process with minimal latency. In addition, you can also save API costs.

Docker Model Runner supports native GPU acceleration for Apple Silicon. According to Docker, other platforms will follow in later releases. All these features are available as a Beta in Docker Desktop 4.40.

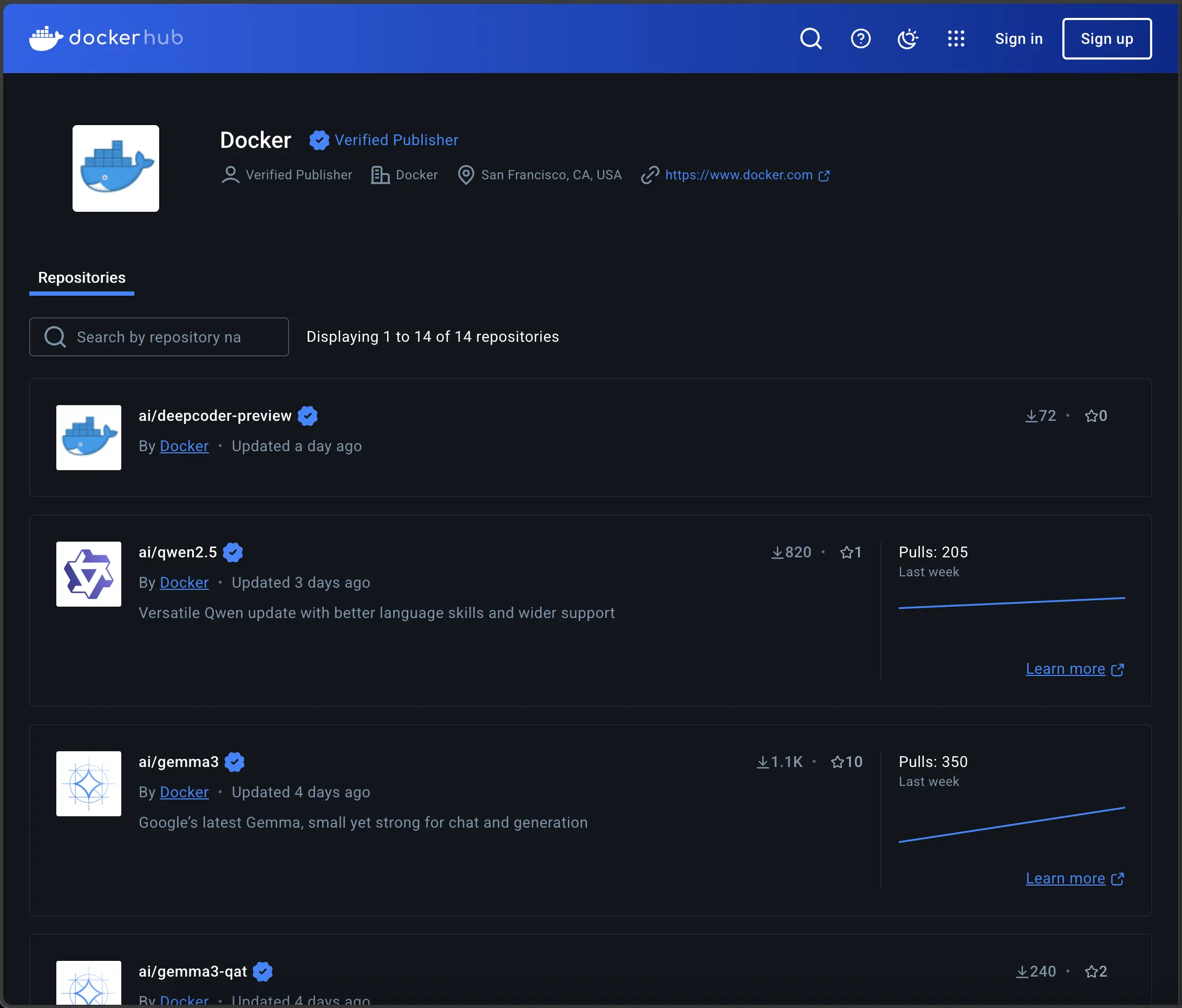

Docker Hub for LLMs

With Docker Model Runner, Docker standardizes the packaging of AI models as OCI artifacts. It’s an open standard for distributing and versioning models using the same registries and workflows as containers.

So, you can easily pull models from Docker Hub just like you do with images. Later, it should also be possible to push your own models. Some powerful LLMs are already available on Docker Hub, such as Gemma 3, Llama 3.3, Phi 4, and Mistral Nemo.

We think this is the start of something big, and we are sure data scientists and developers will love it.

Next, let’s look at the available CLI commands.

Docker Model Runner CLI

-

docker model status: Check if the Docker Model Runner is active. -

docker model help: List all available commands and display help information. -

docker model pull <model-name>: Pull the model from Docker Hub. -

docker model list: List all pulled models on your computer. -

docker model run <model-name> "Hello World.": Run the model and interact with it using a one-time prompt. -

docker model run <model-name>: Run the model and interact with it using chat mode. -

docker model rm <model-name>: Remove the model from your computer.

Example with Mistral Nemo

Let’s demonstrate how easy it is to use Docker Model Runner with Mistral Nemo. We’ll run the example on a MacBook Pro M4 Max.

First, make sure you have installed Docker Desktop 4.40 or later. Docker Model Runner is currently only available for Apple Silicon Macs. According to Docker, other platforms will follow.

Next, check if the Docker Model Runner is enabled.

Enable Docker Model Runner:

- Open the settings menu in Docker Desktop.

- Navigate to Features in development.

- Set the checkmark for Docker Model Runner. (Maybe the checkmark is already set.)

Pull the model Mistral Nemo:

docker model pull ai/mistral-nemo

The models can be large so the initial download might take some time. However, once downloaded, the models are stored locally for faster access. The models are loaded into memory only when needed and unloaded when not in use to save resources.

One-time prompt:

docker model run ai/mistral-nemo "Hello World."

The model responds to the prompt and ends the conversation.

Chat mode:

docker model run ai/mistral-nemo

The model opens a chat, allowing you to ask more questions. It’s like having ChatGPT on your local machine. With the difference that no data leaves your computer, it’s perfect for privacy-first use cases.

Conclusion

We think Docker Model Runner will be a huge success. The reason is that many developers and data scientists are already using Docker. In addition, it is easy for developers to integrate AI models into their daily workflows because it is as simple as running a container. Docker brings AI models to an ecosystem where thousands of developers already are.

We will test Docker Model Runner intensively and share our experiences on our social media channels. So feel free to follow us on X and Threads.

💡 Do you enjoy our content and want to read super-detailed guides about AI Engineering? If so, be sure to check out our premium offer!