Mistral’s Codestral - Create a local AI Coding Assistant for VSCode

A lot has happened in the AI field since the initial release of ChatGPT. Today, state-of-the-art large language models (LLMs) can write code and support developers in their programming work. So it’s time to bring these powerful new models into your daily coding workflow.

Mistral AI recently unveiled its first-ever code model, Codestral. In this article, we use this new model to create a local AI coding assistant and integrate it into VSCode, running 100% locally. Thanks to the open-source community, you don’t have to pay for coding assistants like GitHub Copilot.

Let’s get started!

What is Mistral’s Codestral?

Mistral AI, a European AI start-up, released Codestral on 29 May 2024 as an open-weight model under the Mistral AI Non-Production License. It is explicitly designed for code generation tasks and aims to help developers write code faster and better.

Here are the key points:

- Trained on a dataset of 80+ programming languages, including popular ones like Python, Java, C, C++, JavaScript, …

- It can complete functions, write tests, and fill in partial code using a fill-in-the-middle (FIM) mechanism.

- 22B parameters and a 32k-token context window

- According to Mistral’s benchmarks, Codestral outperforms existing code models that have higher hardware requirements.

- Available on Hugging Face and Ollama

Next, we create our local AI coding assistant!

Create a local AI Coding Assistant with Codestral

Not long ago, LLMs were only accessible through the APIs of big providers like OpenAI or Anthropic. However, we want to create a local coding assistant, so we need a tool to run our model locally.

A great tool for this is Ollama. The advantage of local models is that no provider has access to your chat data.

Step 1 - Download Ollama and Mistral’s Codestral

Ollama is a free, open-source tool for running LLMs locally via a command-line interface. You can run many state-of-the-art open LLMs like Llama 3, Phi 3, or Codestral. With this tool, you can get an open LLM running on your own hardware in a few minutes.

First, you need to download Ollama. It is available for macOS, Linux, and Windows.

Once you have installed it, you can use the Ollama command-line tool to download an open model of your choice. In our case, we download the model codestral:

```bash
ollama pull codestral
```

Then, you can run and chat with the model via the command line:

```bash
ollama run codestral
```
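Besides the interactive CLI, Ollama runs a local HTTP server (by default at `http://localhost:11434`) with a REST API. The following minimal sketch sends a single prompt to Codestral through the `/api/generate` endpoint using only the Python standard library. It assumes the Ollama server is running on the default port and that `codestral` has been pulled; the helper names `build_generate_request` and `generate` are our own.

```python
import json
import urllib.request

# Default Ollama address (assumption: you have not changed host or port).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt: str, model: str = "codestral") -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = "codestral") -> str:
    """Send the prompt to the local server and return the complete answer."""
    with urllib.request.urlopen(build_generate_request(prompt, model)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, Ollama returns a single JSON object;
    # the generated text is in its "response" field.
    return body["response"]
```

With the server running, `print(generate("Write a Python function that reverses a string."))` should print Codestral's answer, just like a prompt typed into `ollama run codestral`.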

In addition, you can print a list of all your downloaded models:

```bash
ollama ls

# example output:
# NAME                ID              SIZE      MODIFIED
# codestral:latest    fcc0019dcee9    12 GB     18 hours ago
# starcoder2:3b       f67ae0f64584    1.7 GB    2 weeks ago
# llama3:latest       a6990ed6be41    4.7 GB    2 weeks ago
```

You can see that the codestral model has a size of 12 GB. We recommend a computer with 32 GB of RAM if you plan to run it locally.

Alternatively, you can try a smaller model like Llama 3 (8B) or Phi 3 (3.8B) if the larger model runs too slowly on your computer.
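As a rough rule of thumb, the size of a model’s weights is the number of parameters times the bits stored per weight, divided by 8. The Python sketch below compares half precision (16 bits) with a 4-bit quantization that, including its scaling factors, uses about 4.5 bits per weight (as in llama.cpp’s Q4_0 format; we assume, without having verified it, that Ollama’s default tags use a comparable scheme). It ignores the extra memory needed for the context window (KV cache) and the runtime itself, which is why some headroom beyond the file size is advisable.

```python
def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of a model's weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Rounded parameter counts: Codestral ~22B, Llama 3 ~8B.
for name, params in [("codestral (22B)", 22e9), ("llama3 (8B)", 8e9)]:
    fp16 = weight_size_gb(params, 16)  # unquantized half precision
    q4 = weight_size_gb(params, 4.5)   # assumed ~4.5 bits/weight (Q4_0-style)
    print(f"{name}: fp16 ~ {fp16:.1f} GB, 4-bit ~ {q4:.1f} GB")
```

For Codestral this gives about 44 GB at 16 bits versus about 12.4 GB at 4.5 bits, consistent with the 12 GB shown by `ollama ls`; an 8B model lands at about 4.5 GB, close to the 4.7 GB listed for Llama 3.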

Next, we switch to VSCode!

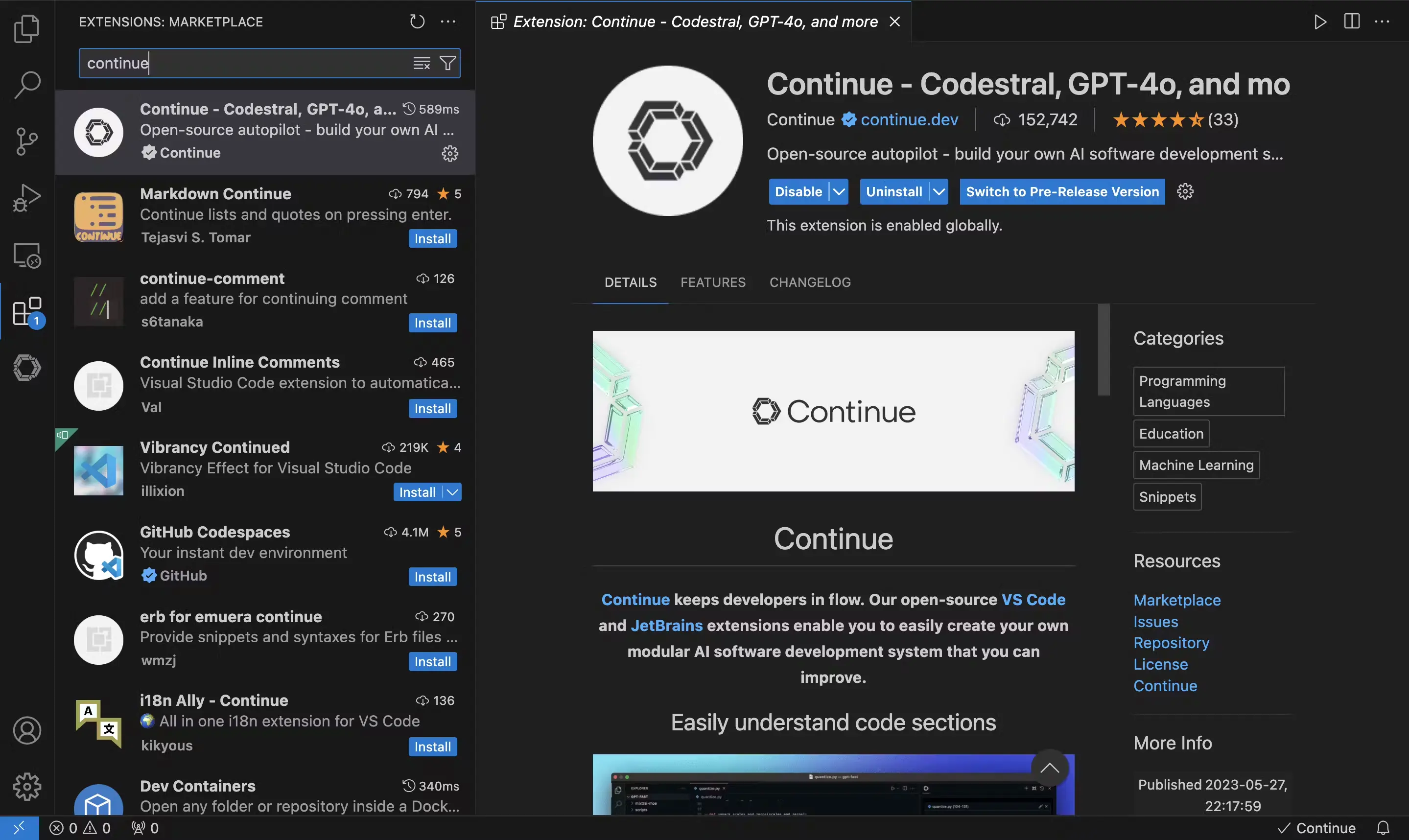

Step 2 - Download Continue VSCode extension

Continue is an open-source extension for VSCode and JetBrains IDEs that helps you create your own AI development environment.

You can install it from the VSCode extension marketplace in seconds. 👇🏽

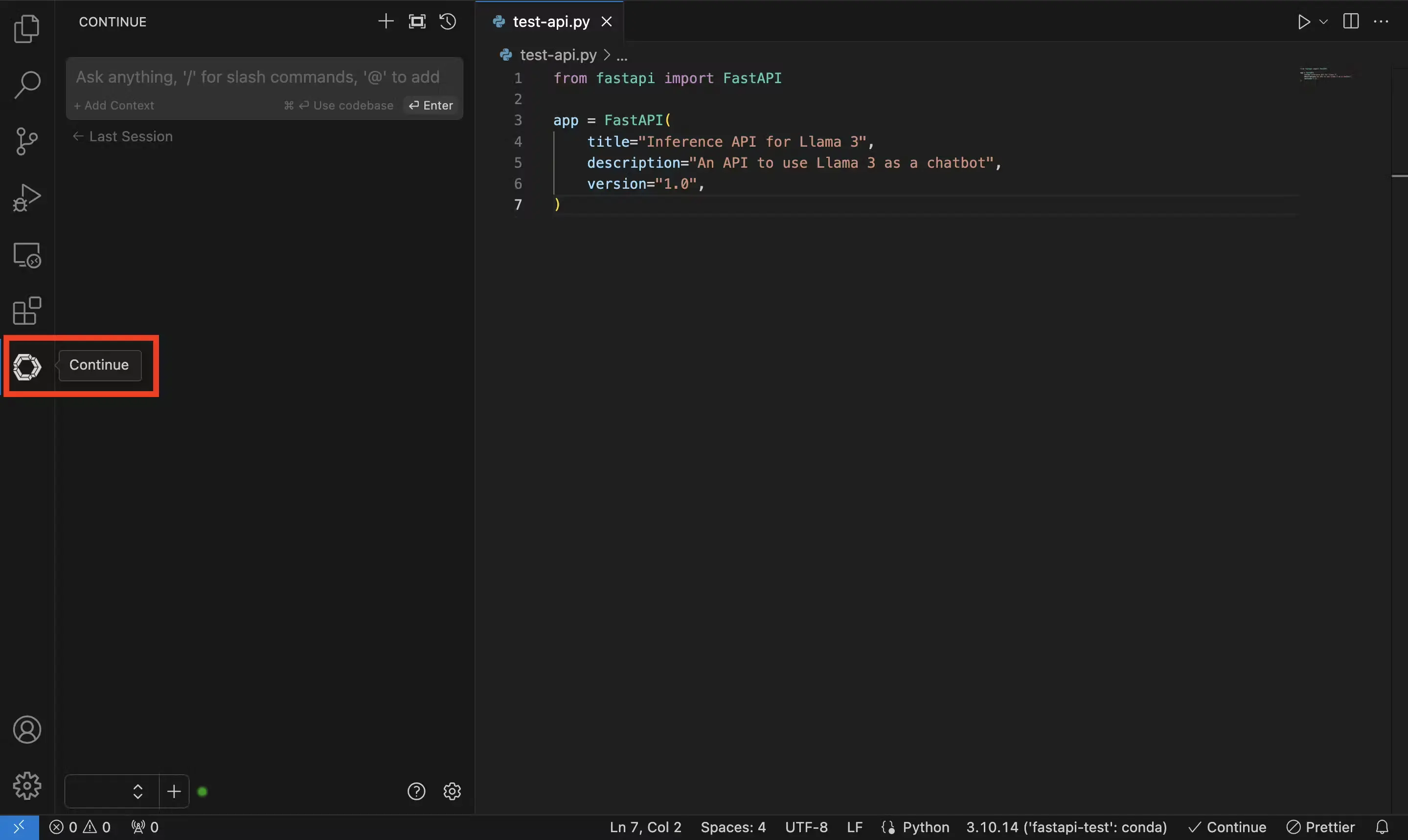

When the installation is complete, you will see the Continue icon in the left sidebar.

Great! Next, we can connect Codestral to VSCode.

Step 3 - Connect VSCode with Codestral

You have two options to connect VSCode with Codestral: run Codestral locally or use the official API. Use the second option if you have trouble running the model locally.

Let’s look at the local option!

Connect with the local Codestral model

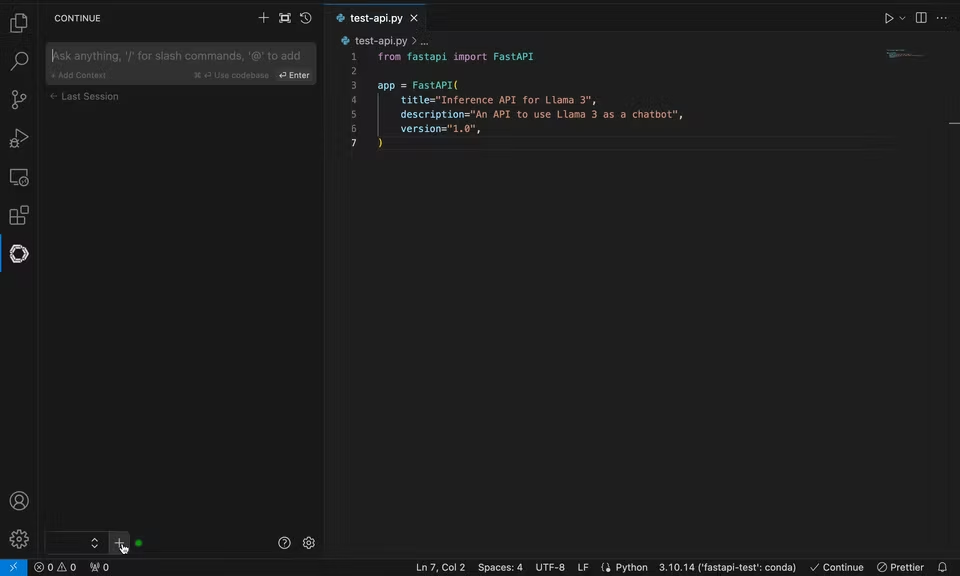

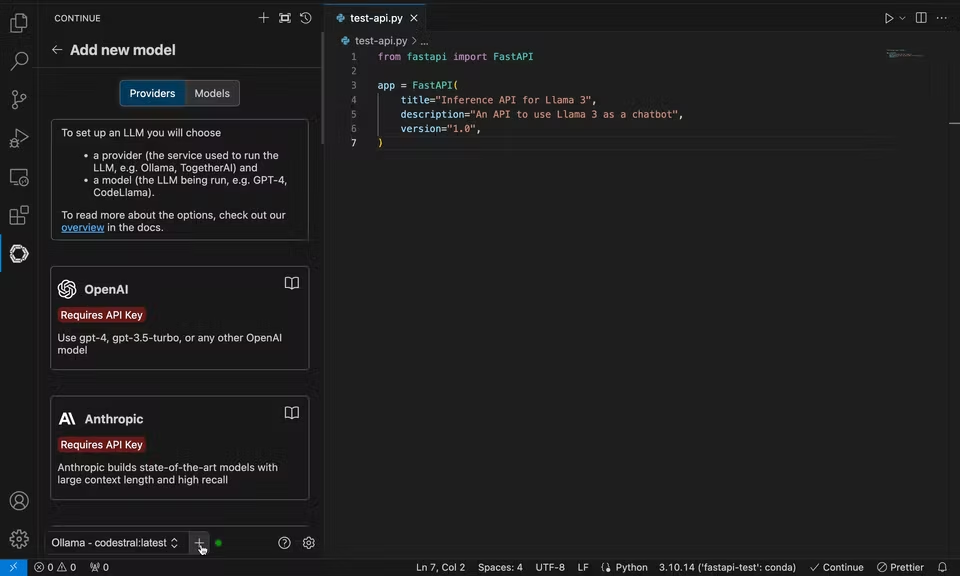

First, click the ‘+’ button. Then, select Ollama as your provider and choose the ‘Autodetect’ option. The model list is then automatically populated with all the models installed in your local Ollama.

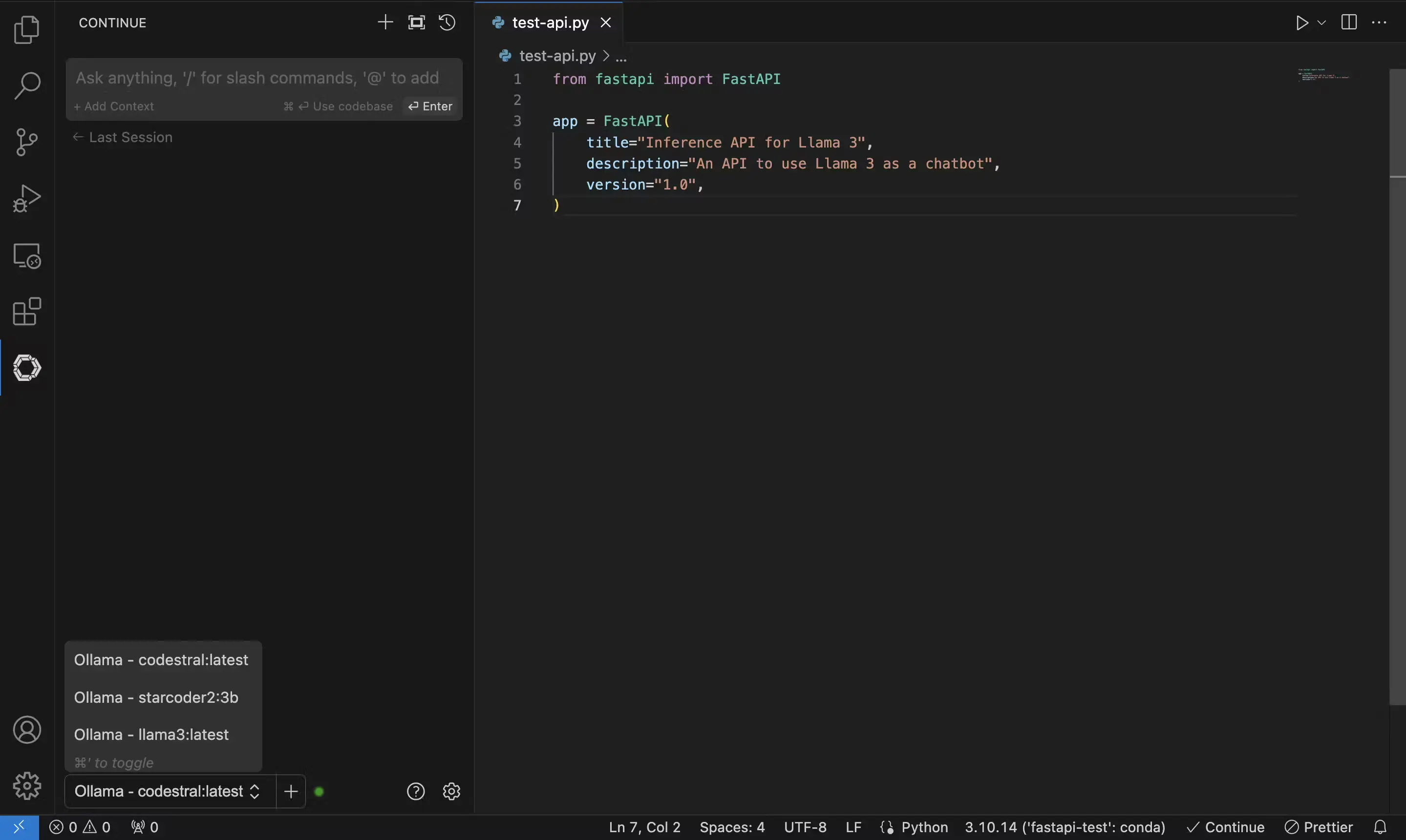
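A list of local models like this one can be obtained from Ollama’s REST API: a `GET` request to `/api/tags` returns the installed models as JSON. We assume Continue’s ‘Autodetect’ relies on a query of this kind, but the sketch below is our own and only illustrates the Ollama side; `parse_model_names` and `list_local_models` are hypothetical helper names.

```python
import json
import urllib.request

# Default Ollama address (assumption: unchanged host and port).
TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(raw_json: str) -> list[str]:
    """Extract model names such as 'codestral:latest' from an /api/tags response body."""
    data = json.loads(raw_json)
    return [m["name"] for m in data.get("models", [])]


def list_local_models() -> list[str]:
    """Ask the running Ollama server which models are installed locally."""
    with urllib.request.urlopen(TAGS_URL) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

On the machine from Step 1, `list_local_models()` should return names such as `codestral:latest`, matching the output of `ollama ls`.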

Next, open the list of available models and select codestral:latest.

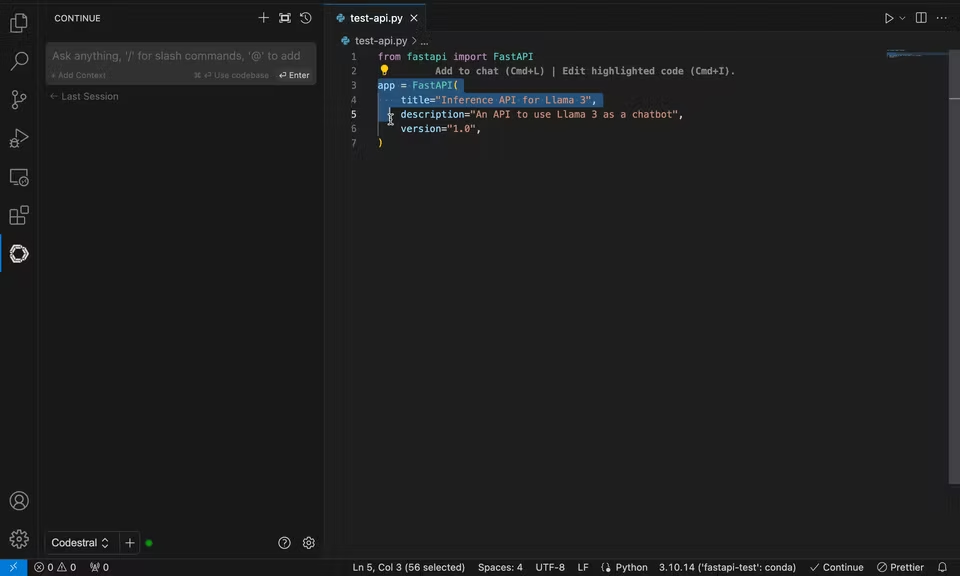
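Behind the UI, Continue stores your model choice in a configuration file. Around the time of Codestral’s release, this was a JSON file at `~/.continue/config.json` with a `models` list and a separate `tabAutocompleteModel` entry for autocomplete; newer Continue releases have moved to a YAML format, so treat the field names below as an assumption to check against the Continue documentation. The Python sketch builds such entries for the local `codestral` model; the function `add_codestral` and the titles are hypothetical.

```python
import json


def add_codestral(config: dict, model: str = "codestral") -> dict:
    """Return a shallow copy of a Continue-style config with local Codestral
    set up as a chat model and as the autocomplete model (assumed schema)."""
    updated = dict(config)
    entry = {"title": "Codestral (local)", "provider": "ollama", "model": model}
    # Drop an older entry with the same title so repeated runs do not duplicate it.
    models = [m for m in updated.get("models", []) if m.get("title") != entry["title"]]
    updated["models"] = models + [entry]
    updated["tabAutocompleteModel"] = {
        "title": "Codestral autocomplete",
        "provider": "ollama",
        "model": model,
    }
    return updated


print(json.dumps(add_codestral({"models": []}), indent=2))
```

The printed JSON shows roughly what the UI steps above produce; editing the file by hand is an alternative if the UI does not list your model.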

Now, everything is ready! You’ve created a local AI coding assistant for VSCode. Congrats!

If hardware constraints prevent you from running the model locally, you can use Mistral’s official API to access Codestral. At the time of writing, Codestral can be used via the API for free during an 8-week beta period!

Connect to the Codestral model via API

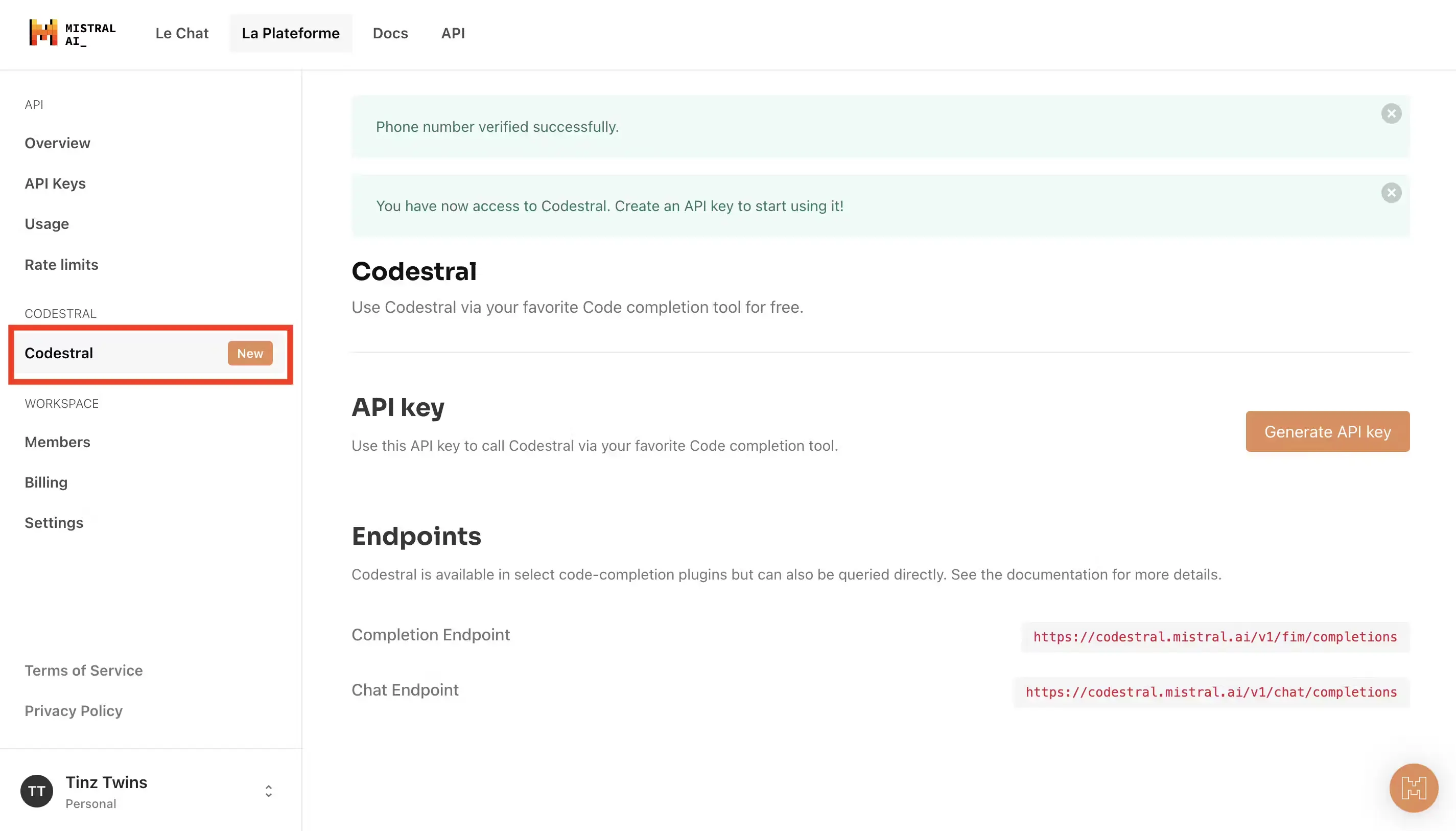

To access Codestral via the API, you need an API key, which requires a Mistral AI account.

Log in to your Mistral AI account and open the account settings. Then, click on Codestral to create a new API key.

Click on ‘Generate API key’ and copy the key!

Next, set the API key in VSCode:

Paste your API key into the field and select Codestral as the model.
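To check that your key works outside VSCode, you can call the Codestral chat endpoint directly. This sketch assumes the dedicated endpoint `https://codestral.mistral.ai/v1/chat/completions`, the model name `codestral-latest`, an OpenAI-style response layout, and an environment variable we chose to call `CODESTRAL_API_KEY`; verify all of these against Mistral’s current API documentation, since endpoints and model names can change.

```python
import json
import os
import urllib.request

# Assumed Codestral chat endpoint; check Mistral's docs before relying on it.
CHAT_URL = "https://codestral.mistral.ai/v1/chat/completions"


def build_chat_request(question: str, api_key: str,
                       model: str = "codestral-latest") -> urllib.request.Request:
    """Build an authenticated chat-completion request (assumed model name)."""
    payload = {"model": model, "messages": [{"role": "user", "content": question}]}
    return urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def ask_codestral(question: str) -> str:
    """Send a question and return the assistant's reply text."""
    api_key = os.environ["CODESTRAL_API_KEY"]  # hypothetical variable name
    with urllib.request.urlopen(build_chat_request(question, api_key)) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Assumed OpenAI-style layout: the reply is in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Reading the key from an environment variable keeps it out of your source code; `ask_codestral("Explain Python list comprehensions.")` then returns the model’s answer if the key and endpoint are valid.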

That’s all! Now, you can use Codestral via the API as your personal coding assistant.

Let’s look at practical examples!

Step 4 - Use Codestral as your personal coding assistant

In this section, we show you two examples of how you can use your AI coding assistant.

Explain code

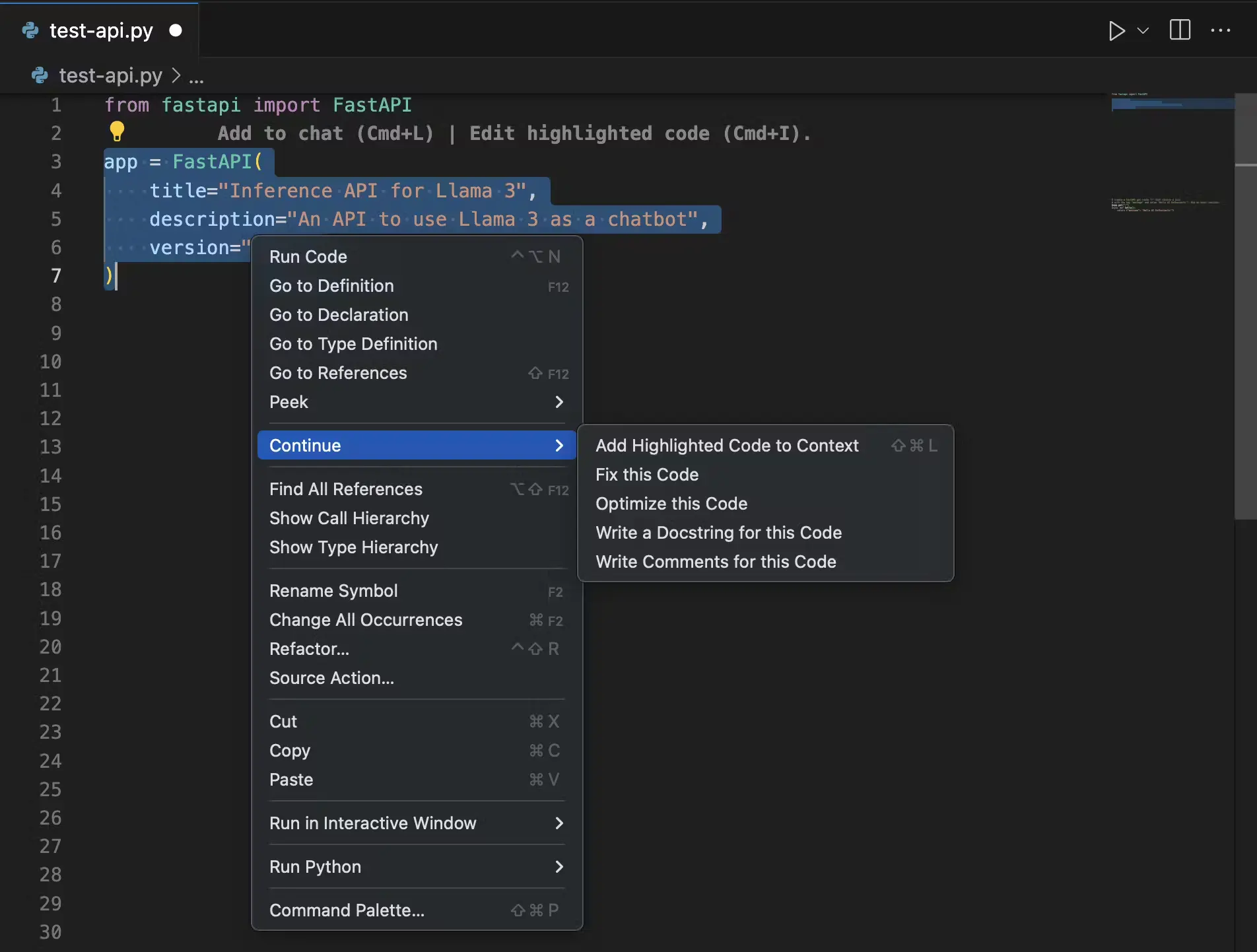

You can highlight a section of your code and add it to the model’s context, either with a keyboard shortcut or via the right-click menu.

Then, you can ask the model anything about the codebase, for example, “Explain the following code!”.
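Conceptually, adding a selection to the context means sending the highlighted code together with your question to the model. The sketch below approximates this against Ollama’s `/api/chat` endpoint at its default address; the prompt layout and the helper names `build_explain_payload` and `explain` are our own assumptions, not necessarily how Continue formats its requests.

```python
import json
import urllib.request

# Default Ollama chat endpoint (assumption: unchanged host and port).
CHAT_URL = "http://localhost:11434/api/chat"


def build_explain_payload(selected_code: str,
                          question: str = "Explain the following code!",
                          model: str = "codestral") -> dict:
    """Combine the question and the selected code into one user message."""
    content = f"{question}\n\nCode:\n{selected_code}"
    return {"model": model, "messages": [{"role": "user", "content": content}], "stream": False}


def explain(selected_code: str, question: str = "Explain the following code!") -> str:
    """Ask the local model about a code snippet and return its answer."""
    payload = build_explain_payload(selected_code, question)
    req = urllib.request.Request(
        CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # Non-streaming /api/chat responses carry the reply in message.content.
    return body["message"]["content"]
```

For example, `explain("squares = [x * x for x in range(10)]")` should return Codestral’s explanation of the list comprehension, provided the Ollama server is running.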

This is a convenient way to chat with parts of your codebase: instead of switching to Stack Overflow or Google, you ask your coding assistant directly. If you run the model locally, this even works without internet access. Brilliant, right?

Autocomplete code

Next, we look at the autocomplete functionality. As you type, your assistant automatically generates suggestions for you, which can increase your coding speed.

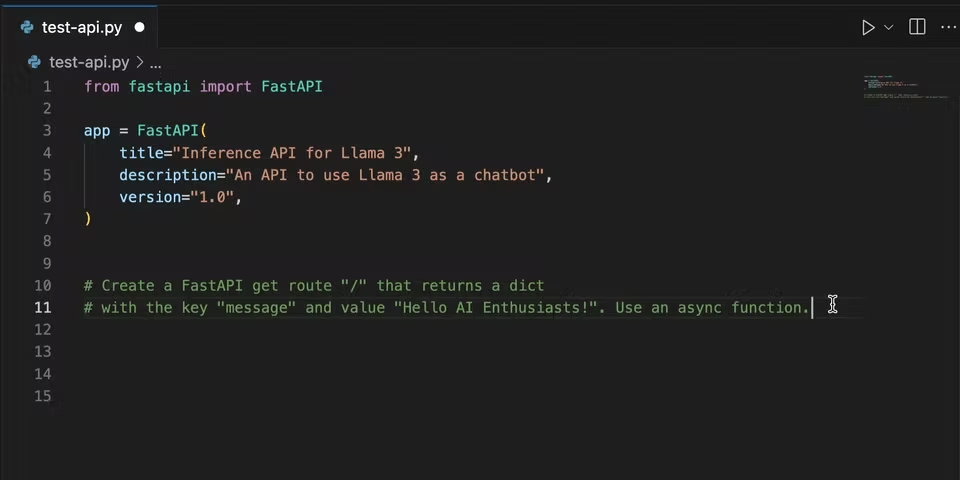
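Code autocomplete is commonly implemented as fill-in-the-middle (FIM): the editor sends the code before the cursor as a prefix and the code after it as a suffix, and the model generates what belongs in between. Codestral supports FIM. The sketch below builds a request body in the shape we assume for Mistral’s FIM endpoint (`https://codestral.mistral.ai/v1/fim/completions`, with `prompt` and `suffix` fields, sent with an `Authorization: Bearer` header); it only illustrates the mechanism and is not how Continue implements autocomplete with a local model. The helper names are hypothetical.

```python
import json


def split_at_cursor(source: str, cursor: int) -> tuple[str, str]:
    """Split the file contents at the cursor offset into (prefix, suffix)."""
    return source[:cursor], source[cursor:]


def build_fim_payload(source: str, cursor: int, model: str = "codestral-latest") -> dict:
    """Build a fill-in-the-middle request body (assumed field names)."""
    prefix, suffix = split_at_cursor(source, cursor)
    return {
        "model": model,     # assumed model name
        "prompt": prefix,   # code before the cursor
        "suffix": suffix,   # code after the cursor
        "max_tokens": 64,   # keep suggestions short, as autocomplete does
        "temperature": 0,   # deterministic suggestions
    }


code = "def add(a, b):\n    \n\nprint(add(2, 3))\n"
cursor = code.index("    \n") + 4  # cursor on the indented, empty line of the function body
print(json.dumps(build_fim_payload(code, cursor), indent=2))
```

Because the model also sees the suffix, it can produce a body such as `return a + b` that fits the call `add(2, 3)` further down, which plain left-to-right completion could not take into account.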

For example, you can write a comment containing an instruction, and the model then suggests a function based on it.

Look at the following example:
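For instance, typing the comment at the top of the block below might lead to a suggestion along the lines of the function underneath. This is a hand-written illustration of the kind of completion to expect, not captured Codestral output; actual suggestions will vary.

```python
# Write a function that checks whether a number is prime.
def is_prime(n: int) -> bool:
    """Return True if n is a prime number."""
    if n < 2:
        return False
    # Only divisors up to the square root of n need to be checked.
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True
```

Accepting such a suggestion usually takes a single keystroke (Tab), after which you can review and adjust the code as usual.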

Imagine how much this can speed up your daily coding. In our experience, Mistral’s Codestral is even more precise here than previous models.

Give it a try!

Conclusion

In this article, we’ve shown how you can easily create a local coding assistant in VSCode. If your computer does not meet the hardware requirements, you can use an API instead, such as Mistral’s.

We have been using Mistral’s Codestral for a few days now and are impressed by how precisely the model works. Nowadays, every developer should use AI-powered coding assistants: their capabilities are impressive compared to a year ago.

Thanks so much for reading. Have a great day!
